Why I Am Seeking to be an AI Optimist

Because I am a Realist

Below is the cover of a book I released in 2018. It reflects the wariness I had been feeling about AI’s impact on jobs since 2017, on the heels of writing a book about marketing automation in 2015.

The relevant bit is the tsunami image. I don’t take tsunamis lightly. I remember when more than 100,000 people died in Aceh, Indonesia, and I was profoundly struck by the tsunami in Japan, especially the footage that captured the waters rising.

By 2018, I was trying to capture my aspiration to be an optimist, and to recommend surfing the tsunami rather than being overcome by it. At the time, I sensed that many jobs would be lost as AI grew more powerful, and I couldn’t see how it would do anything but continue to grow in power and capability.

My reflex wasn’t negative per se. I was just starting to look into the data, as well as economists’ predictions: alarmists, optimists, and everything in between. What I was seeing, though, was a lot of speculation and very little data, so at the time I was just starting to dig. By the time I released the book, I was advocating three options: adapt, adopt, and adept. Adapting to AI is an option: wait and see, and keep track. Adopting is an option: start using AI platforms and AI-powered tools. But it felt then that ultimately the best thing to do would be to become adept in AI.

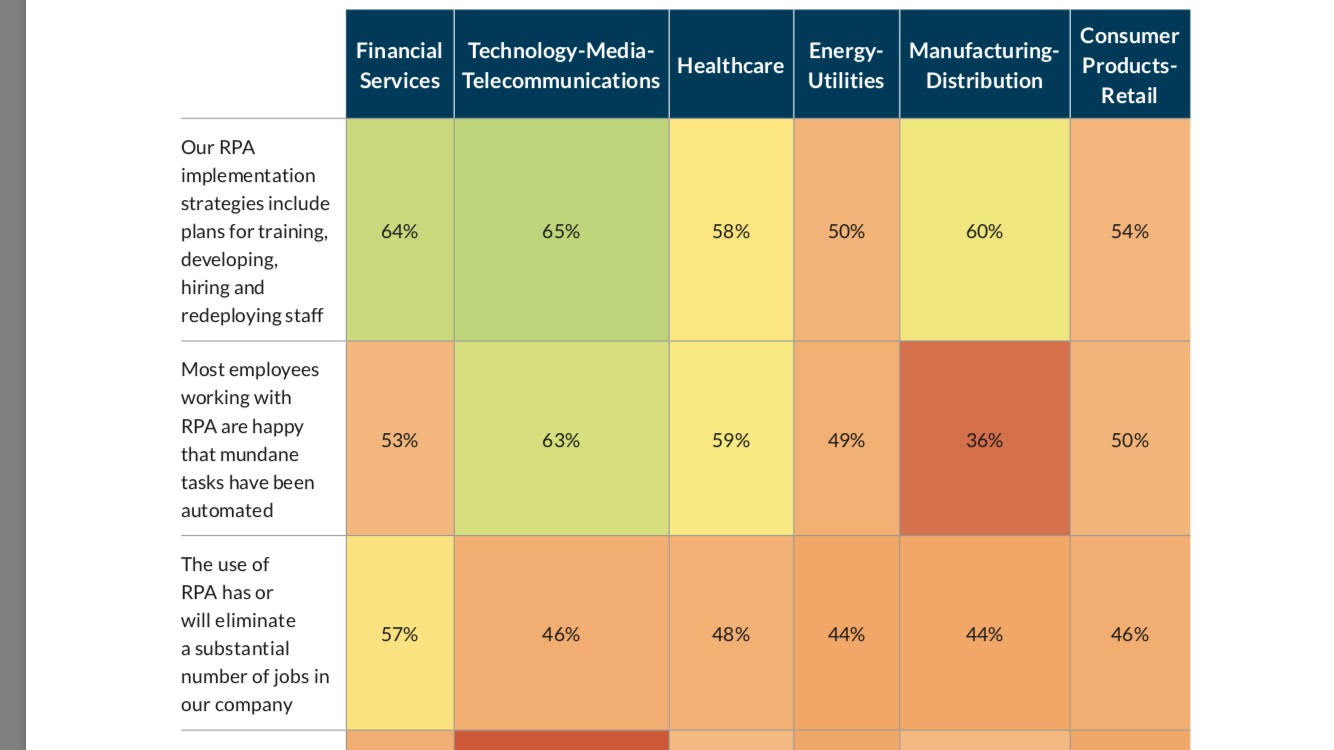

Until 2022, all I really had were the arguments in my book, trend analysis, and some very hard-to-come-by data from 2019. That data already showed companies across industry verticals self-reporting significant headcount reductions from the automation of white-collar work, and expecting this to continue.

This data was consistent with the trends I was tracking: advances that were hard to predict in detail but likely overall, and the economics of how automation services are sold, on the promise of saving money, especially during times of duress. I received tenure in March 2020, just as the pandemic was gearing up, and part of what I watched during the pandemic was the increase in automation, both in the office and in the physical world.

To be clear, I’ve never been against automation per se. I just regret the job loss and layoffs, and I have wondered how to advise students and colleagues. Part of my question was to analyze the claim that “just like other transformations, AI and automation will lead to a net increase in jobs.” I was pretty skeptical about this, but who am I compared to prominent economists and others? Still, I made preliminary guesses, spent a lot of time studying AI economics, talked to economists, worked with them to understand things, and developed enough expertise to have more credibility. I headlined at an NYC AI Summit, presenting the themes of Surfing the Tsunami to C-level executives and others, and gradually built more expertise, even though I don’t teach at a top-tier university. It didn’t really matter: my main purpose was to better inform my students, and then share the results with others. I never really found a platform beyond posting on LinkedIn, probably because of my lack of pedigree, though I tried. But I did at least learn, and I kept researching, gathering evidence, and sharing it with my students, at my university, and on LinkedIn.

Then, by the time ChatGPT came out, things were clearer, but there was still the familiar refrain that “AI augments.” I like what Kyla Scanlon said fairly recently in an interview with Ezra Klein: she used the tsunami analogy, arriving at it on her own, and was talking specifically about Gen Z. She also described some of the optimism, or denial, about job loss as “fanciful thinking.” Only a month or two later, as I write this in August 2025, it is becoming clear in mass media and prime publications that there will be significant job loss. What mystifies me is that the job loss that has already happened has not really been reported. It’s certainly there. I’m glad to share the evidence with you if you connect with me on LinkedIn and send a message. I plan to gather the documents I’ve made and put them on my site; I just haven’t gotten around to it yet.

The main thing I’ll say here is that you can infer the scale of job loss, or at least part of it, by looking at companies like ServiceNow: their market cap, how much revenue they make, how much they charge for software agents, how much work those agents do relative to humans, and the growth of both the company and its sector. I fed a lot of that kind of data and reasoning into AI, and the resulting estimate of job replacement was quite large. Part of the reason this isn’t recognized more widely is that companies don’t necessarily have to report it, at least not at the federal level. There are things like the WARN Act, but there are loopholes, and the reporting and data are a patchwork.
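To make that kind of reasoning concrete, here is a minimal Python sketch of the back-of-envelope arithmetic involved. It is an illustration, not the estimate described above: the function names and every input (agent revenue, price per agent, how much of one person’s work an agent does, growth rate) are hypothetical placeholders, to be replaced with figures you can actually source.

```python
# Back-of-envelope (Fermi) estimate of how many human roles sold AI agents might stand in for.
# Every number below is a HYPOTHETICAL placeholder, not a real company figure.


def estimate_human_equivalent_jobs(
    agent_revenue_usd: float,
    price_per_agent_usd: float,
    humans_replaced_per_agent: float,
) -> float:
    """Agents deployed = revenue / price; human-equivalent roles = agents x work ratio."""
    if price_per_agent_usd <= 0:
        raise ValueError("price_per_agent_usd must be positive")
    agents_deployed = agent_revenue_usd / price_per_agent_usd
    return agents_deployed * humans_replaced_per_agent


def project_growth(base: float, annual_growth_rate: float, years: int) -> list[float]:
    """Compound the base estimate forward by an assumed company or sector growth rate."""
    return [base * (1 + annual_growth_rate) ** year for year in range(years + 1)]


if __name__ == "__main__":
    base = estimate_human_equivalent_jobs(
        agent_revenue_usd=100_000_000,  # hypothetical annual revenue from agents
        price_per_agent_usd=50_000,     # hypothetical yearly price per agent
        humans_replaced_per_agent=0.5,  # hypothetical: one agent does half a person's work
    )
    # Hypothetical 20% annual growth, projected three years out.
    for year, jobs in enumerate(project_growth(base, annual_growth_rate=0.2, years=3)):
        print(f"year {year}: ~{jobs:,.0f} human-equivalent roles")
```

The sketch is deliberately simple: each input is a separate, visible assumption, so you can see how sensitive the result is to, say, the price per agent or the share of a person’s work one agent handles.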

So, bottom line: there has already been a lot of job loss from AI, and it will continue to grow, for a variety of reasons.

However, if you face and accept this, in part as a reason to wake up, be ready to take action, and not be complacent about your profession, your career plans, and your job security, then you can also recognize that there is significant opportunity.

The next image is from an interview on www.learnskills.tech. It reflects my aspiration toward optimism, and the affirmation I pass along from time to time in the AI classes I teach and on LinkedIn: there is tremendous opportunity.

I probably should have used a different wave image, but partly the idea was to reference the original art and emphasize the “surfing” part of the tsunami. One of the great curling waves that surfers seek out at certain spots might have worked better.

This post is a long-winded way of saying that I started to recognize I was probably emphasizing the negative aspects of AI too much, to the exclusion of level-headed, pragmatic discussion of how to learn it, and of profiling people who are doing AI and loving it. I did go as far as finding an ML engineer who used to be a copywriter, and I love that interview because it shows someone whose profession was disrupted by AI responding pragmatically: learning AI and becoming an AI engineer.

I wondered if I should advocate that everyone learn as much AI as they could, simply because I wasn’t seeing any evidence of something AI couldn’t automate. In other words, it seems that eventually AI could automate anything. Especially with LLMs from OpenAI and others, the power is leaping forward, and it is spawning a wide variety of startups applying AI to just about any kind of task. One of the many analyses I did in the past year was simply to ask AI directly what is being automated, and whether there is anything that can’t be automated. It was interesting to see the AI admit this, though with qualifications. Yet when I asked outright whether any startups were working in the areas the chatbot had indicated might be safe, it confirmed that there were. It was surreal to go several rounds of this: securing admissions and examples of startups automating certain things, then getting disclaimers listing areas that weren’t automated yet, then feeding that very list back and asking which startups were automating those areas, and getting more admissions. You can ask me for this conversation, and I will post it on my site eventually.

It’s not that it will happen immediately, but it can happen a lot sooner than people realize, because so much of work can be automated, and because so much money is being invested in the pursuit of AI profits. It is what it is. When you look at the implications, the economics say that a company that deploys more AI will likely generate more profit and be more competitive, and then others will follow. The same applies to national economies. In the U.S., there is little likelihood of regulation that prevents companies from adopting AI and laying people off, or that even requires them to report layoffs caused by AI, though some states are starting to explore this. The economics alone are likely to keep this momentum going. I could be surprised, but I would not count on regulation that significantly reduces the adoption of AI.

And even if it did happen in the U.S., whether AI were taxed (which is unlikely), regulated, or subject to strict, enforced reporting, other countries would likely dive deeply into AI, gain economic advantage, and force the U.S. to adjust its policies. It’s not clear whether tariffs or trade regulations could prevent this. But at present, American tech companies are actually pressuring governments elsewhere, such as in the EU, not to regulate AI, and are getting support from the U.S. government. Part of their rationale is that these companies need to be free to lead in AI. Whatever the reason, regulation is not likely, and so AI adoption is likely to continue. This is a realist acceptance.

Then came vibecoding apps, which have risen to prominence since the beginning of 2025 and leapfrog AI engineers and tech professionals. Vibecoding apps like Replit, Cursor, and others make it possible for anyone to build very sophisticated apps, including apps that use AI, just by prompting, the same way you would with a chatbot. There have been some unintended side effects, such as security holes in rapidly built DIY apps that inadvertently left data exposed, but that hasn’t stopped the onrush, and security issues can be fixed. So now you don’t even need to be an AI engineer to build advanced apps.

This has created an interesting and undeniable opportunity for entrepreneurs to develop solutions without necessarily having to know AI. If you know a field, or learn one, chances are there are still problems that can be solved: problems that have been overlooked, or that aren’t large enough for some company to have solved them yet. Or there might be an expensive existing solution, and now a local entrepreneur might be able to build their own and price it much lower, because they didn’t have to raise enough money to hire AI engineers or software developers. There are already examples of people seizing this kind of opportunity: leapfrogging the entire spectrum of professional-task automation to the point where you can prompt your way to a very sophisticated app, and then make money from it.

This is one reason I am seeking to shift in an optimistic direction. I am still a realist, but it feels like I’ve done what I can from the position I’m in. I don’t really have a platform to do much more, and this issue has not been the focus of my career, just one of its themes. Over the last ten years, it has been enough for me to do what I’ve been advocating: evolve, accept where things are headed, and learn everything I can about AI. I have deep digital marketing expertise, and I was teaching digital marketing when I started to wonder, in 2017, how long it would be until the digital marketing jobs I was preparing people for would be automated. My conviction grew as I learned, and now I teach applied AI courses: a mixture of using the new generative AI tools and getting exposure to coding and the prerequisites for studying AI at the master’s degree level.

As for my students, and the colleagues I urge to learn AI (meaning anyone on LinkedIn, or anyone else for that matter), I definitely believe vibecoding tools like Replit are important. Still, because they are easy to use, a lot more people will be using them. And they are still just one AI-powered platform, a particular application of AI: using AI to automate app creation. There is still demand for AI engineers, in spite of AI development itself increasingly being automated, and to me the definition of AI engineer is changing. There are people who get PhDs, or learn AI on their own, to the point where they can work at an AI company and build the tools other people use. They are getting really high salaries. But because of vibecoding apps, entry-level AI roles are harder to get into, meaning not using generative AI tools, but becoming an AI or machine learning engineer.

For reference, see the interview with Wendy Aw on learnskills.tech: that kind of AI engineer. There is demand now, and there will be more in the future. But it feels like the best job security, in white-collar work at least, is likely to come from learning or knowing a field and then applying AI to it. If you know only AI, there is something to that. But knowing a particular business field, or another field, lets you help design AI solutions with more sophistication. It could very well be some kind of app, or it could be some other use of AI, such as analyzing data for healthcare, science, or some other field. Vibecoding apps don’t necessarily integrate domain expertise or replace the relationships humans still have in business networks. Technically, this kind of expertise can be embedded in them. But for someone who is wide awake, combining a field with AI seems the strongest path.

Recently there was a headline about computer science grads having a harder time finding jobs, with one of the reasons being the rise of automated AI coding assistants. In a LinkedIn post, I suggested the answer is to learn everything you can about AI, and learn how to apply it to a particular field.

This has still been a somewhat negative discussion, but my journey has brought me to the conclusion that AI has already replaced many jobs, that this will increase, and that at this point my advocacy may do more good by inspiring people in a positive way, with the opportunity.

Some say in marketing and sales, and in startups, that a pain pill is stronger than a vitamin. That is, there is stronger demand for something that solves a problem than for something that “enhances”. There are better explanations of this, but when consumer spending is stretched, people cut back to what is most valued and most necessary. Businesses do the same: discretionary spending gets cut, and “non-core” positions get reduced.

When I’ve considered my students and colleagues, given the “fanciful thinking” out there and the assertions that AI has not destroyed jobs and will not, or that many new jobs will be created, it has felt like I needed to emphasize the consequences of not taking AI seriously. But I have amassed a body of research, charted it out, and accepted it; I feel like I can’t do much more; and the disruption is now being much more publicly admitted. It feels like time to emphasize the optimistic part.

So in a class or conversation, if a person is not convinced about the disruption, I might still bring it up and refer to something I’ve already written, or to a list of articles, third-party references, or commentary. But I feel it is time for me to swing in the direction of optimism and emphasize the opportunity: the vitamin, so to speak.

Thanks for reading. Feel free to message me on LinkedIn if you have a question or want to see any of the evidence. Don’t be shy. And if you enjoyed this, or found it meaningful, please find the post on LinkedIn from around 8/12/25. Eventually I’ll find the link and put it here. Consider liking or reposting it so others can see this and some of the other posts I’m making on Substack, if they want more extended thoughts.

Ah yes! The picture at the beginning: to me, this is optimism. A smile, and a medevac helicopter in the background. Something good: helping people, having fun. Riding in helicopters and taking some flying lessons has been great fun for me. It is thrilling. That is the spirit I would like to learn to embody as I try to inspire students and others to pursue AI.

Note: at the time of writing, it feels like some areas that have nothing to do with AI could be reasonably secure, in theory: the trades (construction, plumbing, welding, electrical work, etc.); public school teaching (until or unless vouchers and/or online alternatives to public schools gain prominence); caregiving; psychological or physical therapy; some healthcare roles; and some areas of hard science and engineering. In general, that means work robots are less likely to do in a residential setting, and areas that take more learning. AI is used in hard science and engineering, but there’s still opportunity for fundamental research. Hopefully people using AI in healthcare, pharmaceutical companies, and biotech will help save many lives. Engineering also has a lot of opportunity: AI tools are used, but the work still requires people, as in chip design, aerospace, and other kinds of engineering. The principle: the more work it takes to get there, the fewer people will do it, and to some extent, the more job security and opportunity there is. Some hard sciences and engineering feel like this.

Otherwise, for white-collar work: learn AI, and learn to apply it to a field. Start with vibecoding apps and be aware of them. Then go as far as you are willing to. Learn all the things AI can do: computer vision, predictive analysis, simulation, and more.

Best wishes.